About a year ago, I was tasked with greatly expanding our URL rewrite capabilities. Our file-based nginx rewrites were becoming a performance bottleneck, and we needed to make an architectural leap that would take us to the next level of SEO wizardry.

In comparison to the total number of product categories in our database, Stylight supports a handful of “pretty URLs” – those understandable by a human being. With http://www.stylight.com/Sandals/Women/ you have a good idea what’s going to be on that page.

Our web application, however, only does URLs like http://www.stylight.com/search.action?gender=women&tag=10580&tag=10630. So, nginx needs to translate pretty URLs into something our app can find, fetch and return to your browser. And this needs to happen as fast as computationally possible. Copy/paste that link and you’ll see we redirect you to the pretty URL. This is because we’ve discovered women really love sandals so we should give them a page they’d like to bookmark.

We import and update millions of products a day, so the vast majority of our links start out as “?tag=10580”. Googlebot knows how dynamic our site is, so it’s constantly crawling and indexing these functional links to feed its search results. As we learn from our users and ad campaigns which products are really interesting, we dynamically assign pretty URLs and inform Google with 301 redirects.

This creates two layers of redirection and doubles the number of URLs our web server needs to know about:

- 301 redirects for the user and bots: ?gender=women&tag=10580&tag=10630 -> /Sandals/Women/

- internal rewrites for our app: /Sandals/Women/ -> ?gender=women&tag=10580&tag=10630
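The two directions can be sketched as a pair of lookup tables built from a single pretty URL assignment. This is a minimal Python illustration, not the production setup: the helper names `build_rules` and `resolve`, the dictionary layout, and the `(status, target)` return value are all assumptions made for the example.

```python
from urllib.parse import urlencode


def build_rules(pretty_path, params):
    """Build both entries for one pretty URL assignment (hypothetical helper)."""
    functional = "/search.action?" + urlencode(params)
    redirect = (functional, pretty_path)  # 301 for users and bots
    rewrite = (pretty_path, functional)   # internal rewrite for the app
    return redirect, rewrite


def resolve(uri, redirects, rewrites):
    """Decide what to do with an incoming request URI.

    Returns (status, target): 301 with the pretty URL for a known
    functional link, otherwise 200 with the URI the app should serve.
    """
    if uri in redirects:
        return 301, redirects[uri]
    if uri in rewrites:
        return 200, rewrites[uri]
    return 200, uri  # unknown URI: pass through unchanged


redirect, rewrite = build_rules(
    "/Sandals/Women/",
    [("gender", "women"), ("tag", "10580"), ("tag", "10630")],
)
redirects = dict([redirect])
rewrites = dict([rewrite])

print(resolve("/search.action?gender=women&tag=10580&tag=10630", redirects, rewrites))
# (301, '/Sandals/Women/')
print(resolve("/Sandals/Women/", redirects, rewrites))
# (200, '/search.action?gender=women&tag=10580&tag=10630')
```

Every pretty URL adds one entry to each table, which is why the number of URLs the web server has to know about doubles.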

So, how can we provide millions of pretty URLs to showcase all facets of our product search results?

The problem with file-based nginx rewrites: memory and reload times

With 800K rewrites and redirects (or R&Rs for short) in over 12 per-country rewrite.conf files, our “next level” initially meant about 8 million R&Rs. But we could barely cope with our current requirements.

File-based R&Rs are loaded into memory for each of our 16 nginx workers. Besides consuming 3GB of RAM, nginx took almost 5 seconds just to reload or restart! As a quick test, I doubled the number of rewrites for one country. Twenty seconds later, nginx finished reloading and was now running with 3.5GB of memory. Talk about a “scale fail”.

What are the alternatives?

Google searching for nginx with millions of rewrites or redirects didn’t give a whole lot of insight, but I eventually found OpenResty. Not being a full-time sysadmin, I don’t care to build and maintain custom binaries – had someone else packaged this?

My next search for OpenResty on Ubuntu Trusty led me to lua-nginx-redis – perhaps not the most performant solution, but I’d take the compromise for community supported patches. A sudo apt-get install lua-nginx-redis gave us the basis for our new architecture.

As an initial test, I copied our largest country’s rewrites into redis, made a quick lua script for handling the rewrites and did an initial head-to-head test:

I included network round-trip times in my test to get an idea of the complete performance improvement we hoped to realize with this re-architecture. Interestingly, quite a few URLs (those towards the bottom of the rewrite file) caused significant spikes in response times. From these initial results, we decided to make the investment and completely overhaul our R&R infrastructure.
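One plausible explanation for those spikes is that file-based rewrite rules are tried one after another, so a URL matched by a rule near the bottom of the file pays for every rule above it, while a key-value lookup costs roughly the same for any URL. The Python sketch below illustrates that difference only; the `/Category-N/` paths, the tag numbers, and the `linear_match` helper are made up for the example and are not taken from our rule files.

```python
import re


def linear_match(uri, rules):
    """Try rules in file order. Returns (target, rules_checked)."""
    for checked, (pattern, target) in enumerate(rules, start=1):
        if pattern.fullmatch(uri):
            return target, checked
    return None, len(rules)


# Hypothetical rule set: 10,000 pretty paths, each mapped to a functional URL.
pairs = [(f"/Category-{i}/", f"/search.action?tag={i}") for i in range(10_000)]

# File-style rules: an ordered list of compiled patterns.
rules = [(re.compile(re.escape(path)), target) for path, target in pairs]

# Key-value style: a single hash lookup, independent of rule position.
lookup = dict(pairs)

print(linear_match("/Category-0/", rules))     # ('/search.action?tag=0', 1)
print(linear_match("/Category-9999/", rules))  # ('/search.action?tag=9999', 10000)
print(lookup["/Category-9999/"])               # /search.action?tag=9999
```

The last rule in the list needs 10,000 pattern checks before it matches, whereas the dictionary lookup does the same amount of work for the first and the last entry.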

The 301 redirects lived exclusively on the frontend load balancers while the internal rewrites were handled by our app servers. The first order of business was to combine these, leaving the application to concentrate on just serving requests. Next, we set up a cronjob to incrementally update R&Rs every 5 minutes. I gave the R&Rs a TTL of one month to keep the redis db tidy by automatically dropping unused rewrites. Once a week, we run a full insert, which resets the TTL. And, yes, we monitor the TTLs of our R&Rs; we don’t want all of them disappearing overnight!
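The TTL scheme can be sketched with a small in-memory stand-in for the key-value store. This is an assumption-laden illustration rather than our actual cronjob: `TTLStore`, `insert_rules`, and `min_ttl` are hypothetical names, "one month" is taken as 30 days, the `/Old/Page/` rule is invented, and a fake clock replaces real time so the example runs instantly.

```python
import time

DAY = 24 * 3600
MONTH = 30 * DAY  # assumption: "one month" means 30 days


class TTLStore:
    """Tiny in-memory stand-in for a key-value store with per-key expiry."""

    def __init__(self, clock=time.time):
        self._data = {}
        self._clock = clock

    def set(self, key, value, ttl):
        # Writing a key (again) resets its expiry time.
        self._data[key] = (value, self._clock() + ttl)

    def ttl(self, key):
        """Seconds until expiry, or None if the key is missing or expired."""
        entry = self._data.get(key)
        if entry is None:
            return None
        remaining = entry[1] - self._clock()
        if remaining <= 0:
            del self._data[key]  # expired: drop it
            return None
        return remaining

    def get(self, key):
        return self._data[key][0] if self.ttl(key) is not None else None


def insert_rules(store, rules, ttl=MONTH):
    """Write rules and reset their TTL (incremental or full run)."""
    for key, target in rules.items():
        store.set(key, target, ttl)


def min_ttl(store, keys):
    """Smallest remaining TTL among live keys, as a monitoring signal."""
    live = [t for t in (store.ttl(k) for k in keys) if t is not None]
    return min(live) if live else None


now = [0.0]  # fake clock, in seconds
store = TTLStore(clock=lambda: now[0])

sandals = "?gender=women&tag=10580&tag=10630"
insert_rules(store, {"/Sandals/Women/": sandals, "/Old/Page/": "?tag=1"})

now[0] += 7 * DAY   # a week later, /Old/Page/ is no longer in the rule set
insert_rules(store, {"/Sandals/Women/": sandals})

now[0] += 24 * DAY  # day 31: the stale rule has expired on its own
print(store.get("/Old/Page/"))                                     # None
print(min_ttl(store, ["/Sandals/Women/", "/Old/Page/"]) / DAY)     # 6.0
```

A rule that keeps showing up in the weekly full insert never gets close to expiry, while one that has dropped out of the rule set disappears after a month without any explicit cleanup. A minimum TTL that keeps shrinking would be the signal that the full insert has stopped running.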

The performance of Lua and Redis

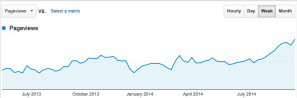

We launched the new solution in mid-July. To give some historical perspective, here’s a look at our pageviews over the last 18 months:

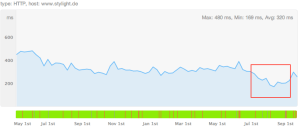

And our average response time during the same period:

As you can see, despite rapidly growing traffic, we saw significant improvements to our site’s response time by moving the R&Rs out of files and into redis. Reload times for nginx are instant – there are no more rewrites for it to load and distribute per worker – and memory usage has dropped below 900MB!

Since the launch, we’ve doubled our number of R&Rs. Check out how the memory scales:

Soon, we’ll be able to serve all our URLs like http://www.stylight.com/Dark-Green/Long-Sleeve/T-Shirts/Gap/Men/ by default. No, we’re not quite there yet, but if you need that kinda shirt…

We’ve got a lot of SEO work ahead of us which will require millions more rewrites. And now we have a performant architecture which supports it. If you have any questions or would like to know more details, don’t hesitate to contact me @danackerson.

